My first steps with DALL-E 2

A few days ago, I finally got access to DALL-E 2. The first thing I did was turn to my partner and ask her for the most bizarre prompt she could think of. Her answer was:

A kangaroo with an eye patch playing castanets in front of the Eiffel Tower

Well, that was actually… very disappointing. I quickly tried a second one:

A wolf riding on a motorcycle through the desert

WTF! That looks even worse!

Was I using the real DALL-E 2 or some kind of toned-down version? I used a prompt from the original paper to check whether the results were comparable:

A shiba inu wearing a beret and black turtleneck

Well, that actually looks amazing, just like in the paper. Let’s try to change the prompt a bit:

A wolf wearing a beret and black turtleneck

What?! How is that possible? How can the quality drop so much just by swapping a single word? At this point, conspiracy theories started to form in my mind: could the examples in the DALL-E 2 paper be so heavily cherry-picked that even the slightest change causes the model to fail? This is an academic scandal, I will expose them and win a Pulitzer!

And then I tried this:

A wolf wearing a beret and black turtleneck, digital art

and this:

A high-quality photograph of a wolf wearing a beret and black turtleneck, 4k

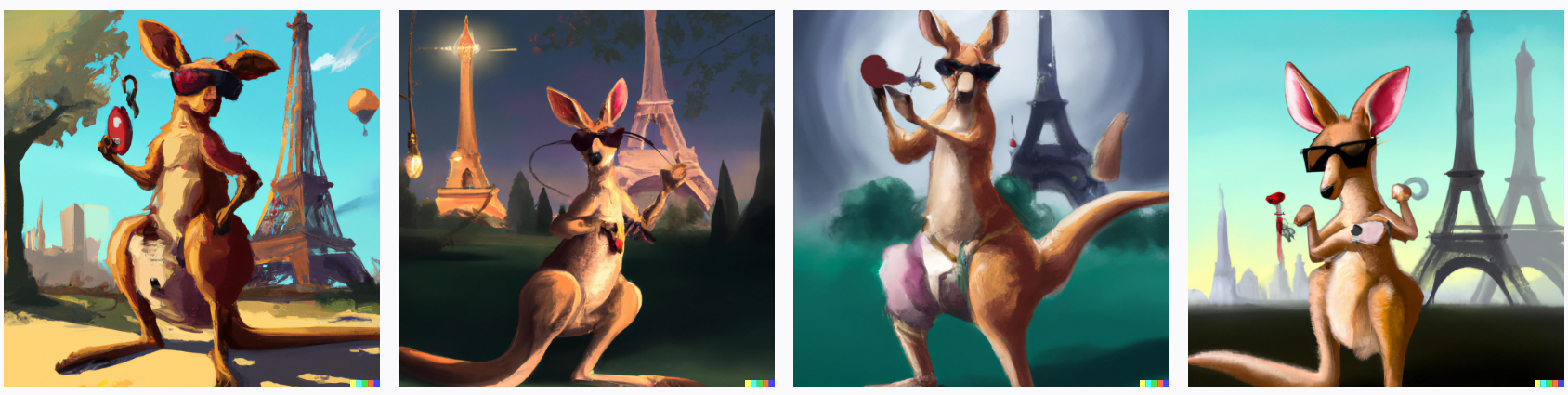

Those look much better indeed. My theories were a bit less wild now: a dog wearing clothes is something you can actually find in real life, whereas my other prompts were highly implausible. It seems that when you ask for something really weird, you need to give DALL-E 2 more guidance by adding modifiers (we covered modifiers in depth in a previous post).

Here is the digital-art kangaroo, just for your viewing pleasure:

Recreating a classic

That was a good way to get a better grasp of how DALL-E 2 works. However, I'm less interested in the ability of these models to generate memes than in their potential as creative tools. So I decided to do a little experiment: a looong time ago, I used to play around with 3D rendering and made this piece (and trust me, I'm really embarrassed to share it now):

I tried to recreate it using DALL-E 2. It took some tinkering with the prompt, but I ended up quite happy with the result. It definitely has that rainy-day mood:

Conclusion

I like what DALL-E 2 can do, but I haven't been blown away by it. I expect some freely available models to improve upon DALL-E 2 in the near future.

What I find most fascinating about art-generating models is the endless possibilities they open up for co-creation between the artist and the model. I think we have already reached the point where these models can be a really useful tool for any artist, digital or not. Think of my little experiment: it could be an easy way to quickly explore different compositions or color schemes, even if you later produce the final artwork using traditional media. You don't need to be a digital artist, or an AI artist, to benefit from DALL-E 2 and the like.